Reflections in a Spoon: Why We Need More Than Algorithms to Shape Our Worldview

From flipped spoon reflections to AI biases, this is a story about staying humble, seeking new viewpoints, and remembering we still need other humans to think well

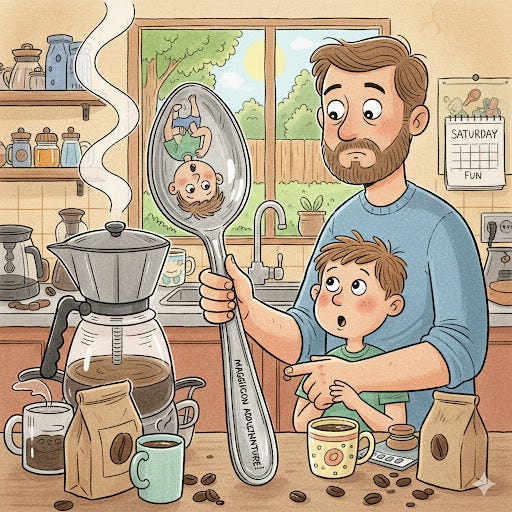

The other morning, I was making coffee with my son when a simple spoon turned into a lesson I don’t want him to forget.

We were looking at our reflection in the spoon. On one side, our faces looked “normal.” Flip it around, and suddenly we were upside down. Nothing else in the kitchen changed—same light, same people, same moment. All that changed was the angle. A tiny shift, and the whole image was transformed.

It struck me how similar this is to the way we hold our beliefs.

Most of the time, we walk around assuming our view is the “right side up.” Our thoughts feel true simply because they are ours. But like that spoon, a slight change in perspective can flip the whole picture. What once felt obvious can suddenly look incomplete—or even wrong.

If we’re not careful, our digital tools can subtly become mirrors that only reflect our existing beliefs back to us.

Now, layer in the world we live in.

Algorithms quietly surround us, learning our preferences and feeding us more of the same. Netflix suggests the shows we’re likely to enjoy. Amazon recommends products we’re likely to buy. Large language models and AI systems do something similar with words. They shape their answers around how we phrase our prompts and, in tools that keep a memory, around our past conversations. The result is answers that feel tailored, familiar, and comfortable.

There’s nothing inherently bad about this. Relevance is useful, but it comes with hidden risks we need to be aware of.

If we always ask questions in the same way, from the same angle, we’ll keep seeing the same version of ourselves—“right side up,” even when we might be upside down.

But even when we try to change angles, there’s another layer we need to keep in mind: the AI models themselves are not neutral.

Recent research published in PNAS Nexus (Oxford University Press) compared several OpenAI GPT models with global survey data on cultural values. It found that, by default, these models tend to reflect values closer to those of English-speaking and Protestant European countries, especially around self-expression and related social values. In other words, if you ask a model for “a general opinion,” you might actually be getting “a Western-leaning opinion,” even if that’s not your culture or context.

Here is an overview of the research, generated by NotebookLM.

That doesn’t mean these tools are useless—it just means we should use them with awareness.

So how can we flip the spoon on our thinking in practice? One simple way is to change the angle of our questions on purpose, with prompts like these:

“What might I be missing?”

“Give me the strongest argument against my position.”

“How would someone from a different culture or background see this?”

We can use AI as a tool to question ourselves, not just to comfort ourselves.

But as powerful as this is, there’s one thing AI can’t replace: other humans.

At the end of the day, we’re social creatures. We grow by bumping into other minds, especially the ones that don’t think like we do. No model can substitute for sitting with a real person from a different background, listening to their story, their fears, their hopes, and their worldview.

The cycle I hope my son learns is this:

Expose yourself to different views—through people, books, and yes, even AI.

Listen with an open mind, especially when it’s uncomfortable.

Then step back and think for yourself. Form your own worldview, knowing it will keep evolving.

I often think about the saying attributed to Socrates: “All I know is that I know nothing.”

To me, it’s a daily reminder to hold our thoughts lightly, to let new ideas challenge us, and to stay curious about where we might be wrong.

If there’s one thing I’d like my son to carry with him, it’s this:

You don’t have to be certain to be grounded. All I really know is that love makes everything better.

So next time you’re sipping coffee, scrolling your feed, chatting with an AI, or talking to someone whose life looks nothing like yours, think about that spoon. Ask yourself:

What if I’m seeing this upside down?

What’s one angle I haven’t considered yet?

Sometimes, all it takes is a small flip in perspective to see a different truth.

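If you’d like to see how this works, here’s a short video by Integral Physics that walks through why our reflection looks different on each side of the spoon. The bowl acts as a concave mirror that flips the image upside down, while the back acts as a convex mirror that keeps it upright.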

References:

Yan Tao, Olga Viberg, Ryan S Baker, René F Kizilcec, Cultural bias and cultural alignment of large language models, PNAS Nexus, Volume 3, Issue 9, September 2024, pgae346, https://doi.org/10.1093/pnasnexus/pgae346