Understanding Responsible AI

Navigating the Ethical Landscape of Artificial Intelligence

A Personal Story of Responsibility

“Responsibility is something that you have to take. It is not something that can be given to you.” – Daymond John

I once attended a conference where Daymond John shared this quote, something his mother often told him. It reminded me of my own childhood, when my parents took responsibility for everything and made sure my siblings and I had a good life. The same sense of responsibility should shape how we develop and apply artificial intelligence (AI). As users, developers, researchers, and leaders, we are responsible for ensuring that AI technology is safe and beneficial for society, and for building a strong ethical foundation for future generations.

Why Responsible AI Matters

As AI rapidly integrates into our lives, enhancing productivity, innovation, and decision-making, it is crucial to ensure that its development and application are responsible and ethical. Here are some key reasons why responsible AI is essential:

1. Avoiding Bias and Discrimination: AI can inadvertently replicate and amplify existing societal biases. Ensuring fairness and avoiding unjust effects on individuals, especially with respect to sensitive characteristics such as race or gender, is paramount.

2. Ensuring Safety and Security: Robust safety measures must be in place to prevent unintended consequences that could cause harm.

3. Accountability and Transparency: AI systems should offer opportunities for feedback, explanations, and appeals, ensuring that they remain accountable to people.

4. Privacy Protection: AI designs must incorporate privacy safeguards so that people keep control over how their data is used and can see how it is handled.

Principles of Responsible AI

Organizations are increasingly defining their own AI principles based on their missions and values. Although there is no universal definition of responsible AI, common themes include transparency, fairness, accountability, and privacy. Here are some guiding principles:

• Social Benefit: Projects should consider social and economic factors, ensuring that benefits outweigh risks.

• Avoiding Bias: Efforts should be made to prevent AI from reinforcing unfair biases related to race, gender, nationality, etc.

• Safety: Safety practices should be continuously developed and applied to avoid harmful outcomes.

• Accountability: Systems should be designed for human oversight and feedback.

• Privacy: AI should include robust privacy features and transparency in data usage.

• Scientific Excellence: AI development should follow rigorous, multidisciplinary approaches.

The Role of Humans in AI Development

A common misconception is that AI algorithms make the key decisions on their own. In reality, human decisions are critical at every stage, from data collection to deployment, and each choice affects how responsible the final AI solution is. That is why organizations need clear, repeatable processes for responsible AI use. In practice, this means focusing on:

• Systemic Thinking: Learn to think in systems and create processes that support responsible AI.

• Delegation to AI: Understand how to delegate tasks to AI systems effectively.

• Feedback: Regularly review and provide feedback to AI models.

• Ethical Design: Focus on designing AI systems that prioritize ethics and responsibility.

Challenges and the Path Forward

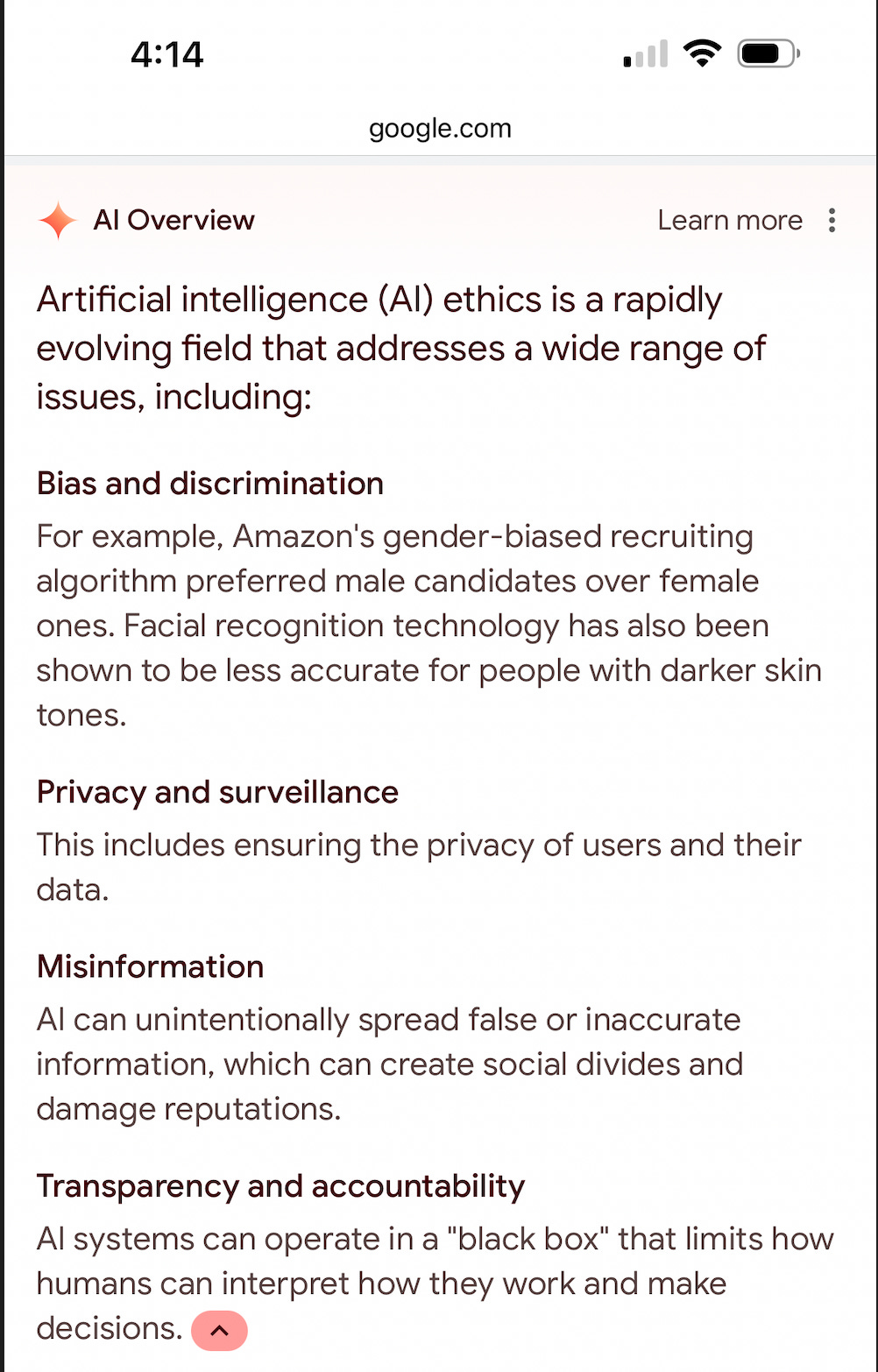

It is ironic that when I Googled “AI ethics issues examples” yesterday, I got the following response:

While these issues are critical, it is telling that Google’s model chose to highlight an example from a competitor, Amazon, rather than any of Google’s own AI ethics issues. This raises a thought-provoking question: will AI models take accountability, or will they, like many people, point fingers at others?

Our societal traits are deeply embedded in these models. AI systems learn from vast datasets that include our behaviors, biases, and decision-making patterns. Therefore, the models often reflect our own tendencies, including deflecting blame. True accountability in AI will only be achieved when we, as a society, embody and model these traits ourselves.

We must recognize that the journey to ethical AI is intertwined with our own evolution as a society. Until we commit to personal and collective accountability, our AI systems are unlikely to change. As developers, users, and stakeholders, it is our responsibility to instill these values in AI, so that AI systems serve as tools for transparency, fairness, and accountability rather than as mirrors of our flaws.

Final Thoughts

Responsible AI is a shared responsibility. By understanding its principles and actively engaging in its development, we can harness AI’s potential while mitigating risks and ensuring it serves the greater good.

Stay curious, experiment, and let’s build the future together!

Spread the Knowledge

If you found this newsletter valuable, share it with others who might benefit. By spreading the knowledge, you help build a community that values responsible AI and ethical innovation. Your support means a lot, and together we can create a brighter future.